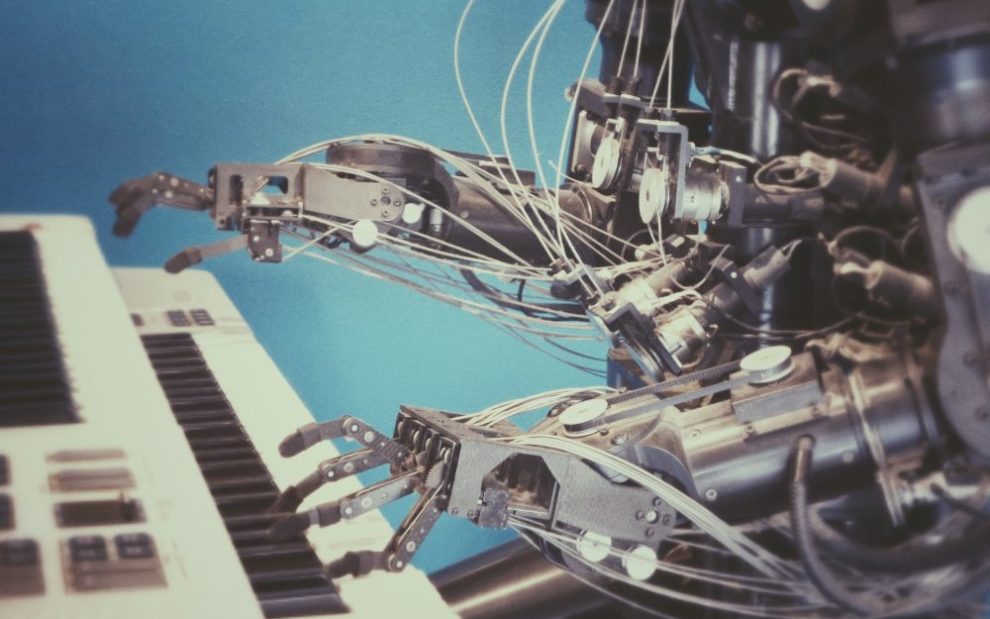

In 2011, at age 27, I experienced a crisis of faith. The man I loved and hoped to marry, a very scientifically minded software engineer, joined a group of other mostly young, male software engineers who wanted to use scientific and logical thinking to do good for others. As noble as this goal may sound, the group caused me much anxiety, particularly because of its strong anti-religious bent. The most unsettling aspect of the movement was its preoccupation with the technological singularity: the idea that artificial intelligence (AI) will eventually surpass human capacity, with irrevocable consequences.

My anxiety over AI led me down a path I don’t look back on fondly. I spent that summer devouring writings by futurists like Ray Kurzweil and Nick Bostrom, obsessively talking about the singularity to anyone who would listen, and getting into absurd fights with my partner: “Promise me you won’t upload your brain into a machine in 2045 when the singularity comes!” I insisted. “I can make no such promises!” he replied.

Unsurprisingly, the relationship did not work out (though we did eventually calm down enough to reconnect as friends). But at age 27, the idea of an AI able to do everything I did and more was extremely frightening, particularly to me as a person of faith. Weren’t humans uniquely made in God’s image? If machines could eventually become conscious, what would that mean about the nature of the soul? If it were possible to upload our consciousness into machines, creating a kind of artificial afterlife, what would that imply about the heaven we Christians hope for? The idea of AI forced me to face an uncomfortable possibility: maybe God really was a delusion. Maybe Richard Dawkins and his fellow materialist atheists were about to be proven right.

Twelve years later, my attitudes have shifted. My faith was rekindled and strengthened; after all, science and religion can and do comfortably coexist in complementary but distinct domains. After the United States presidential election of 2016 and the COVID-19 pandemic, my anxieties became focused much more on tangible current events than on a theoretical robot apocalypse. In recent months, as ChatGPT and other innovations have brought concerns about AI into the mainstream, the questions I’m asking have become less abstract and more practical than the ones I asked 12 years ago. How will AI change our society? How are we who identify as followers of Jesus called to respond to these changes? If a robot claims to be conscious, should it be treated as such? Should we treat an AI as a person with dignity and rights? Perhaps most important, what does it say about us, and what will happen to us, if we do not?

Human treatment of AI is the central theme of acclaimed British novelist Kazuo Ishiguro’s 2021 novel Klara and the Sun. The novel is set in a near-future society where humans are losing their jobs to AI and well-off parents are opting to have their children genetically enhanced at birth to improve their chances of success in a hyper-competitive world. Its narrator is Klara, an AI built to be an “Artificial Friend” to one such child. In this world, genetically enhanced children are taught at home by remote tutors (a scenario that felt strikingly timely when the novel appeared, one year into the pandemic) and rely on Artificial Friends to accompany them and help them develop social skills in preparation for college.

Klara begins her conscious life in a store under the tutelage of a human manager, is bought to accompany a 14-year-old girl named Josie, and develops keen powers of observation of the world around her. Through Klara’s insights, Ishiguro holds a mirror up to human society, suggesting that the real problem with AI lies in the humans who build and use it. As Joshua K. Smith said in a recent interview with this magazine, “Many times the vision of modern robotics is to invent a type of slave.” Historical and fictional accounts of slavery, from Twelve Years a Slave to The Handmaid’s Tale, poignantly reveal that in societies built on oppression everyone suffers, even those who think they’re at the top.

Through his depiction of the humans’ treatment of Klara, Ishiguro exposes and critiques our all-too-human tendency to exploit others. When Josie’s mother holds an “interaction meeting” (essentially a party where teenagers who are tutored at home can practice their social skills), Josie’s peers set out to abuse Klara. When Klara, waiting for a cue from Josie, initially refuses to answer the children’s questions, one of them, Danny, proposes throwing her into the air to see if she’ll land on her feet. “Throwing AFs across the room. That’s evil,” one girl protests. “You’re being soft,” the boy replies.

This bullying is stopped not by Josie but by her childhood friend Rick, the only person in her circle who has not been genetically modified. Previously wary of Artificial Friends, Rick now identifies with Klara’s vulnerability and outsider status. After Rick challenges and embarrasses Danny, Danny’s mother turns on Rick. “You shouldn’t be here at all,” she exclaims, implying that the party is meant only for genetically modified children. Oppression and exclusion are deeply ingrained in this society with or without Artificial Friends, who serve more to reveal dark human tendencies than to cause them.

Klara faces another kind of maltreatment when Josie’s mother raises the sinister possibility of using her as a replacement for Josie. The genetic modification process, though never fully explained in the novel, is shown to be extremely dangerous. We are told that Josie’s older sister died in the process, and Josie has been left fragile and ill. A scientist named Mr. Capaldi, clearly the villain of the story, aims to transform Klara into an artificial replica of Josie should Josie die. Disturbing on multiple levels, this proposal raises the question of personal dignity. While Ishiguro identifies as agnostic, the moral questions he raises resonate with Catholic thought on human dignity and respect for life. Would it be morally acceptable to replace a dead child with a robot replica? Would that help the mother to grieve or plunge her into denial? Would it honor or dishonor Josie’s memory? And how would it harm Klara, who is a being in her own right, to be made to embody another?

When I assigned this book in a British literature class at the Catholic college where I teach, I framed the discussion in terms of Catholic teaching on the dignity of the human person. One student wrote an impassioned critique of Capaldi’s treatment of Klara from the standpoint of Christian personalism. Though not human, Klara demonstrates her personhood in countless ways: her devotion to Josie, her desire for connection with others, her love of learning, and ultimately her own form of religiosity and spirituality (as a solar-powered being, she worships the sun as a source of strength, a relationship that becomes central to the novel’s climax). As in many science fiction stories, she becomes in some ways more human than the humans, an irony at the core of Ishiguro’s cautionary tale.

We don’t need artificial friends to know that the tendency to abuse, exclude, and exploit the vulnerable is endemic to the human condition. While new technologies such as AI may help us solve many of our problems, the choice of how to engage with them is ultimately ours. The Industrial Revolution eventually helped us combat disease and raise humanity’s overall standard of living, but it did little to promote equality and justice (at least not until the labor movement sprang up in reaction to it). The Catholic Church responded to the social changes of the industrial age by developing a body of writings, including Pope Leo XIII’s 1891 encyclical Rerum Novarum (On Capital and Labor), that elevated such principles as solidarity, the dignity of all humans, and the rights of workers.

As our world braces for another major technological shift, we Catholics have the opportunity to remind ourselves and others of these principles as we discern how best to respond. Since the start of this century, the Internet and smartphones have helped us connect instantly with people all over the world, but, as MIT psychologist Sherry Turkle has explored, we are increasingly likely to ignore the people right in front of us.

Like every new technology, AI holds the power to bring out our best and worst qualities. Like every new technology, AI will create problems requiring innovative solutions, perhaps radically altering our economic and social systems. But amid all this change, we need not let ourselves slide into a dystopian scenario, whether the blatant harshness of the worlds created by Philip K. Dick (popularized by the Blade Runner films) or the subtler cruelty that Ishiguro presents in Klara and the Sun. Instead, through careful discernment and a reexamination of Catholic social teaching on human dignity, we hold the power to choose wisely, steering AI and other new technologies toward the good of all affected, including the new beings we create.

Unsplash/Possessed Photography
